Then the Airflow container should be able to find your database once you specify network_mode='your_network_name' on the operator.
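As a rough sketch of what that could look like in a DAG file: the task name, image, and command below are hypothetical placeholders, and `schedule=None` assumes Airflow 2.4 or newer with the Docker provider installed. Note that Docker Compose usually prefixes network names with the project name, so the real name may differ (check `docker network ls`).

```python
from datetime import datetime


def docker_task_kwargs(network_name: str) -> dict:
    """Keyword arguments for a DockerOperator attached to a Compose network."""
    return {
        "task_id": "run_pyspark_job",        # hypothetical task name
        "image": "my-pyspark-image:latest",  # hypothetical image
        "command": "python /app/job.py",     # hypothetical entry point
        # Talks to the Docker daemon through the socket mounted into the container.
        "docker_url": "unix://var/run/docker.sock",
        # Attach the spawned container to the network the database is on.
        "network_mode": network_name,
    }


def build_dag(network_name: str = "your_network_name"):
    # Imported lazily so this file can be read without Airflow installed.
    from airflow import DAG
    from airflow.providers.docker.operators.docker import DockerOperator

    with DAG(
        dag_id="docker_network_example",  # hypothetical DAG id
        start_date=datetime(2024, 1, 1),
        schedule=None,                    # trigger manually; assumes Airflow 2.4+
        catchup=False,
    ) as dag:
        DockerOperator(**docker_task_kwargs(network_name))
    return dag
```

In a real DAG file you would call `dag = build_dag()` at module level so the scheduler can discover it.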

#AIRFLOW DAG LOGGING DRIVER#

    your_network_name: # Add this network definition
      driver: bridge # Use the bridge driver or specify a different driver if needed

A DAG also has a schedule, a start date, and an optional end date. For each scheduled run (say, daily or hourly), the DAG needs to run each individual task once its dependencies are met.

When a DAG submits a task, the KubernetesExecutor requests a worker pod from the Kubernetes API. The worker pod then runs the task, reports the result, and terminates. One example of an Airflow deployment running on a distributed set of five nodes in a Kubernetes cluster is shown below.

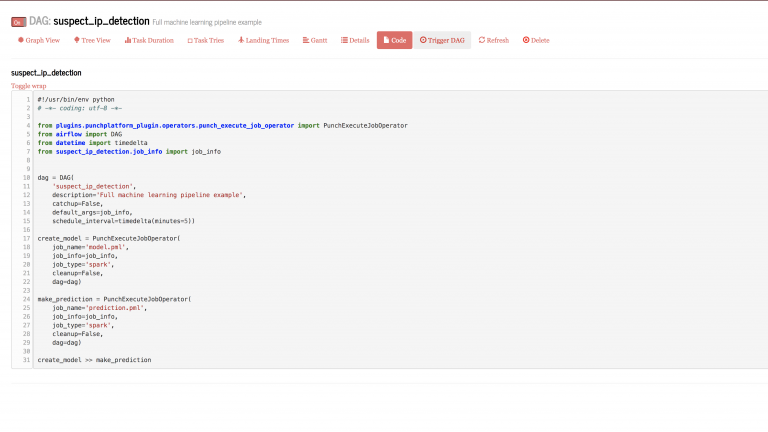
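To make the idea of running tasks according to their dependencies concrete, here is a minimal sketch using only the Python standard library (`graphlib`, Python 3.9+). It is not how Airflow's scheduler is implemented; the task names are made up for illustration.

```python
from graphlib import TopologicalSorter

# Map each task to the set of tasks it depends on (hypothetical task names).
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load"},
}


def run_order(deps: dict) -> list:
    """Return one valid execution order that respects every dependency."""
    return list(TopologicalSorter(deps).static_order())


order = run_order(dependencies)
print(order)
```

Because the graph must be acyclic, `TopologicalSorter` raises `graphlib.CycleError` if two tasks depend on each other, which is the same constraint a DAG imposes.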

Apache Airflow is an open-source process automation and scheduling application that allows you to programmatically author, schedule, and monitor workflows. A DAG (directed acyclic graph) is a collection of tasks with directional dependencies. An Airflow DAG is defined in a Python file and is composed of the following components: a DAG definition, operators, and operator relationships.

The answer to your question is networks. You have to add networks to your docker-compose file:

    version: '3.4'
    postgres-db-volume:/var/lib/postgresql/data
    postgres_data:/var/lib/postgresql/data/
    services/backend/src:/opt/airflow/dags
    var/run/docker.sock:/var/run/docker.sock
    condition: service_completed_successfully
    command: uvicorn src.main:app --host 0.0.0.0 --port 5000 --workers 2

    mkdir -p /sources/logs /sources/dags /sources/plugins
    chown -R "$

    from import BashOperator
    from _operator import DockerOperator

Conclusion

Congratulations! You've run a DAG using the Astro dbt provider to automatically create tasks from dbt models. You can learn more about a manifest-based dbt and Airflow project structure, view example code, and read about the DbtDagParser in a 3-part blog post series on Building a Scalable Analytics Architecture With Airflow and dbt.

#AIRFLOW DAG LOGGING INSTALL#

I am setting up a workflow using Airflow, PySpark, and a PostgreSQL database, all running in Docker Compose. However, when I execute the DAG file in Airflow's UI, I encounter the following error: Py4JJavaError: An error occurred while calling o36.jdbc. It seems like Airflow is unable to resolve the hostname "test_db_3" when attempting to establish a connection to the database.

Any ideas on how I can resolve this issue and establish a successful connection to the PostgreSQL database within the Airflow DAG?

PS: I wanted to use a DockerOperator so that I do not have to install all the PySpark dependencies in the Airflow container, which keeps the services better separated.
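One way to narrow down a problem like this is to check, from inside the container that runs the task, whether the database hostname resolves at all. The sketch below uses only the standard library; the port `5432` and the database name `postgres` are assumptions (PostgreSQL defaults), not values taken from the question.

```python
import socket


def can_resolve(hostname: str) -> bool:
    """Return True if the hostname resolves from the current container."""
    try:
        socket.getaddrinfo(hostname, None)
        return True
    except (socket.gaierror, UnicodeError):
        return False


def jdbc_url(host: str, port: int = 5432, database: str = "postgres") -> str:
    """Build a PostgreSQL JDBC URL (port and database name are assumed defaults)."""
    return f"jdbc:postgresql://{host}:{port}/{database}"


if __name__ == "__main__":
    host = "test_db_3"  # hostname from the error described above
    print(host, "resolves:", can_resolve(host))
    print("JDBC URL:", jdbc_url(host))
```

If `can_resolve` returns `False` inside the task container but `True` inside the database's own Compose network, the two containers are most likely not attached to the same network.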
